A long lull, Certificate Authority distrust issues and a platform migration.

The last year or so has been interesting: I moved from “normal” work to consulting, a great change! The drawback is that I have less time to commit to getting stuff on here, as well as greater concerns over intellectual property rights and confidentiality.

The various blogs/sub-domains that I run, covered under the oholics.net moniker, all had free certificates issued by the (now effectively defunct) StartCom CA. In October 2016, Mozilla started to distrust them. The backstory is here: https://blog.mozilla.org/security/2016/10/24/distrusting-new-wosign-and-startcom-certificates/

Initially, it looked like only the low-traffic sites were affected (they had the newest certificates), so I took the lazy approach of leaving them as they were. I figured that start.com would get their new CA in place and trusted reasonably quickly. Not so! I recently noticed that Mozilla and Chrome had started distrusting even those certificates that were generated prior to October 2016, so now all of the blogs were generating security errors, which was not ideal. I looked at moving to Let’s Encrypt, but it would not have been a simple migration; it was more than a five-minute job. Then, a few weeks ago, I noted that start.com had their new CA in place. Great! But when I asked their support people about global trust, the answer was “not yet”, with no idea of when that would be in place.

I was still running the hosting platform from home, which was less than ideal given the lack of a fixed IP and intermittent issues with Dynamic DNS not updating, plus the running costs, fire risk, etc.

So, I recently made the decision to migrate the platform to a cloud provider and to get the certificate issues resolved properly, finally moving to Let’s Encrypt. On a fresh server, it was remarkably easy to set up, just requiring a little DNS flip-flopping to get things in order.

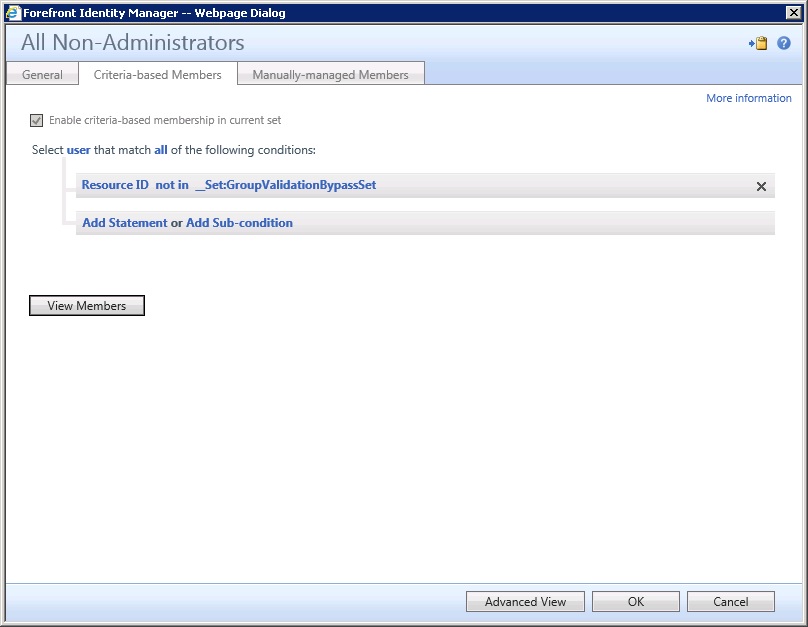

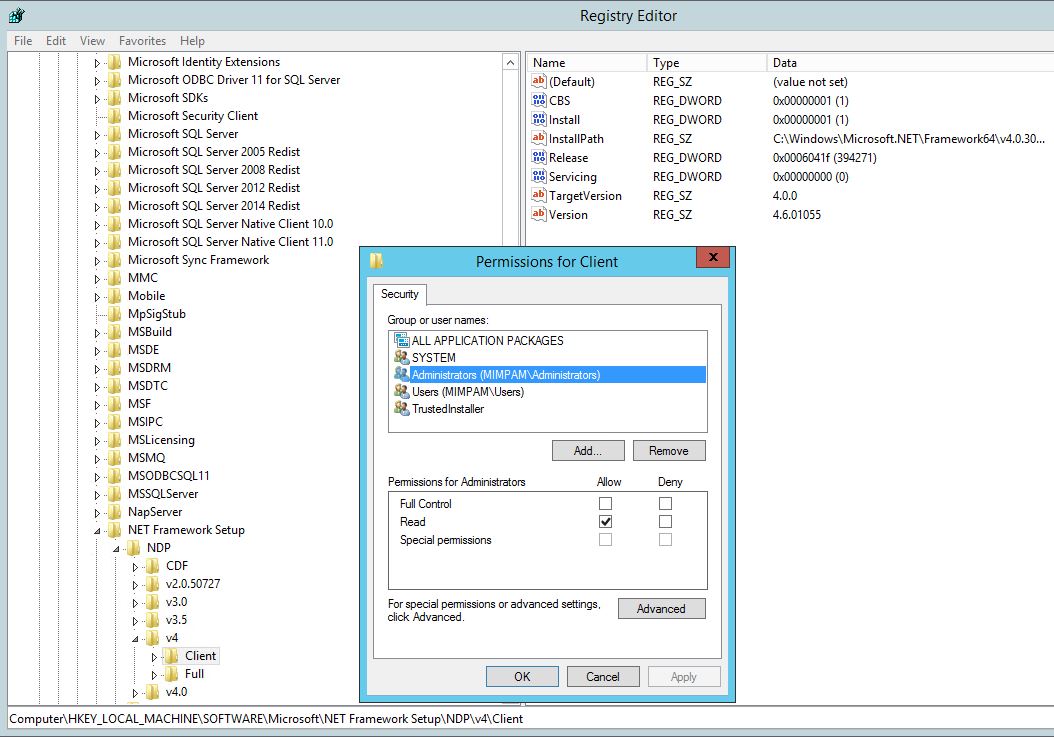

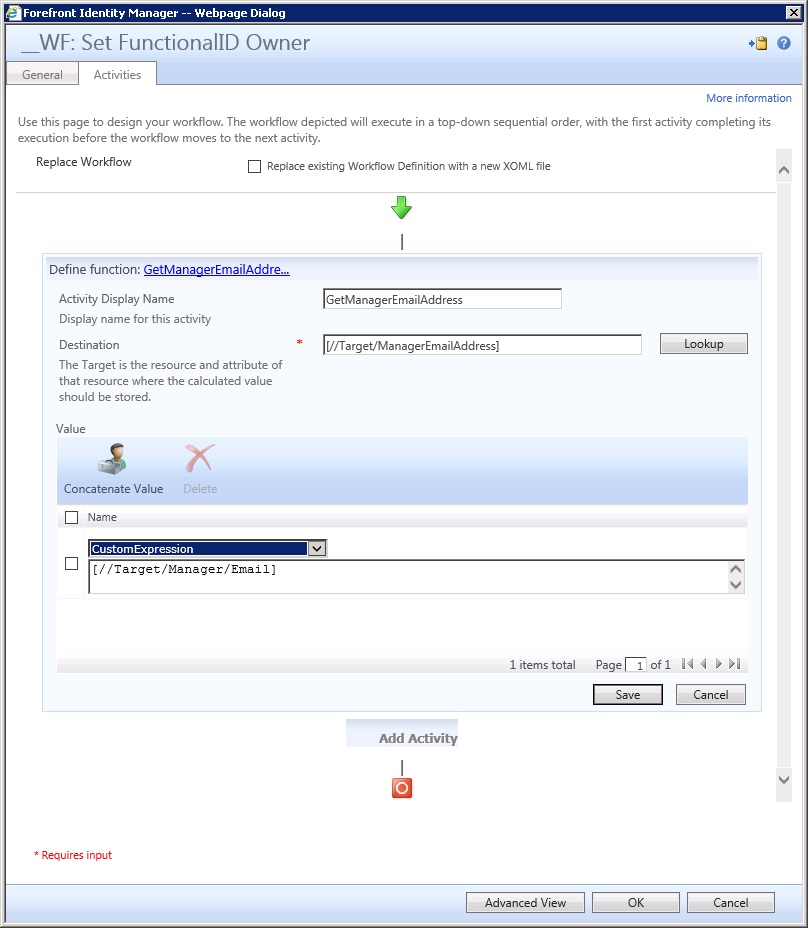

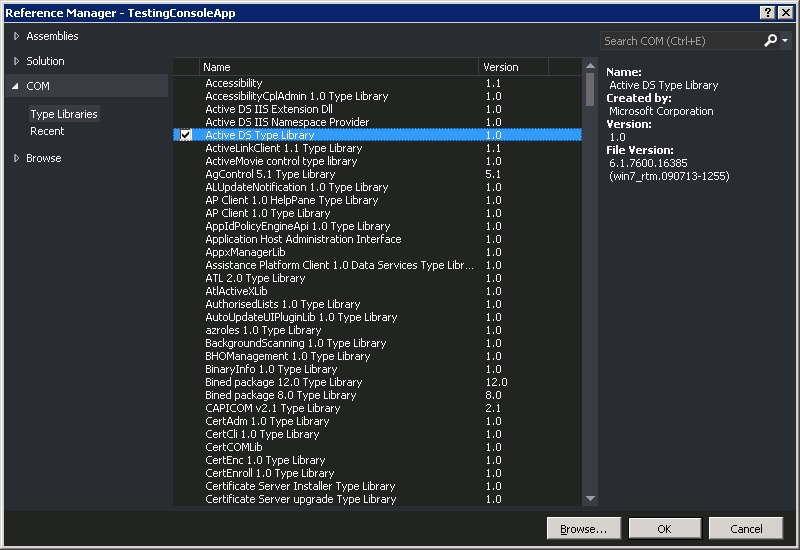

Now that it is all in place and tidy, I have a bunch of stuff to add to the blog. However, FIM is going EOL, so the name of this blog is going to become defunct too! Managing and maintaining the other blogs as separate entities is a bit of a PITA as well. Therefore, I plan to (eventually) migrate content from all four blogs into one new core blog site, name TBD. I may add some stuff here in the meantime, just to get it out of my brain and onto paper, so to speak. Otherwise, I may wait until I have done the migration, depending on how long it takes.

Until then, I hope the previous content still provides a good repository for FIM “stuff” for others as well as myself 🙂