Hiding Portal Buttons from Normal Users

Recently, one of my co-workers was assigned to work with me to learn some FIM and to help, where possible, with some configuration.

One of the things on my to-do list was to find a way of hiding certain portal buttons from a normal user's view. After all, normal users are not (currently) going to be allowed to use the portal for any self-service tasks; that might come later.

So, I sent my co-worker off to find and implement the solution in the test environment, thinking “It can’t be that hard…”

I had already implemented the following solution to hide the New and Delete User buttons: http://blogs.msdn.com/b/connector_space/archive/2014/10/02/5-minute-fim-hacks-hiding-the-quot-new-quot-and-quot-delete-quot-user-buttons.aspx

A week later we met to see how much progress had been made. He had found the following article (http://social.technet.microsoft.com/wiki/contents/articles/2139.how-to-remove-new-delete-and-other-buttons-from-fim-portal-pages.aspx), but didn't really understand what it meant, so we went through it together. I must admit that when I first read it, it didn't make a lot of sense to me either, but after a few re-reads it clicked into place.

This was done about a month ago and I'm already forgetting the steps we carried out, so I'm going to document them in more detail here for future reference.

Create new search scopes, using the original Search Scopes' values as the basis. The resource type should be “Resource”.

I had already set up a new Usage Keyword, “PrivilegedUsers”, which I was using to control what the helpdesk users would see for their admin tasks. So, in the original search scopes, this keyword needs to be added and the BasicUI and GlobalSearchResult keywords removed. In the (new) copies of the search scopes, BasicUI and GlobalSearchResult should be present.
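The keyword swap is easy to get backwards, so here is a minimal Python sketch of it. It only models the change and does not talk to FIM: the `SearchScope` class, the `split_scope` helper and the example “Group” resource type on the original scope are hypothetical.

```python
from dataclasses import dataclass, field


# Hypothetical stand-in for a FIM Search Scope; only the fields needed here.
@dataclass
class SearchScope:
    display_name: str
    resource_type: str
    usage_keywords: set[str] = field(default_factory=set)


READ_ONLY_KEYWORDS = {"BasicUI", "GlobalSearchResult"}
PRIVILEGED_KEYWORD = "PrivilegedUsers"


def split_scope(original: SearchScope) -> tuple[SearchScope, SearchScope]:
    """Return (restricted original, read-only copy) for one search scope."""
    # New copy: the original's values, resource type "Resource",
    # and BasicUI plus GlobalSearchResult present.
    read_only = SearchScope(
        display_name=original.display_name,
        resource_type="Resource",
        usage_keywords=set(original.usage_keywords) | READ_ONLY_KEYWORDS,
    )
    # Original: BasicUI and GlobalSearchResult removed, PrivilegedUsers added.
    restricted = SearchScope(
        display_name=original.display_name,
        resource_type=original.resource_type,
        usage_keywords=(set(original.usage_keywords) - READ_ONLY_KEYWORDS)
        | {PRIVILEGED_KEYWORD},
    )
    return restricted, read_only


# Hypothetical example scope; real keyword lists may hold more values.
dgs = SearchScope("Distribution Groups", "Group", {"BasicUI", "GlobalSearchResult"})
restricted, read_only = split_scope(dgs)
print(sorted(restricted.usage_keywords))  # ['PrivilegedUsers']
print(sorted(read_only.usage_keywords))   # ['BasicUI', 'GlobalSearchResult']
```

Any other keywords already on the original scope are carried over to both sides in this sketch; whether that is right for your portal depends on what those keywords do.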

Original and New Search Scope Usage Keywords:

Do an IIS reset.

Now, on the home page, choose one of those new search scopes and do a “blank” search. Copy the URL that this search went to. Note that the results page of this search presents only the Details button.

Do the same for each of the new search scopes, keeping the URLs for later.

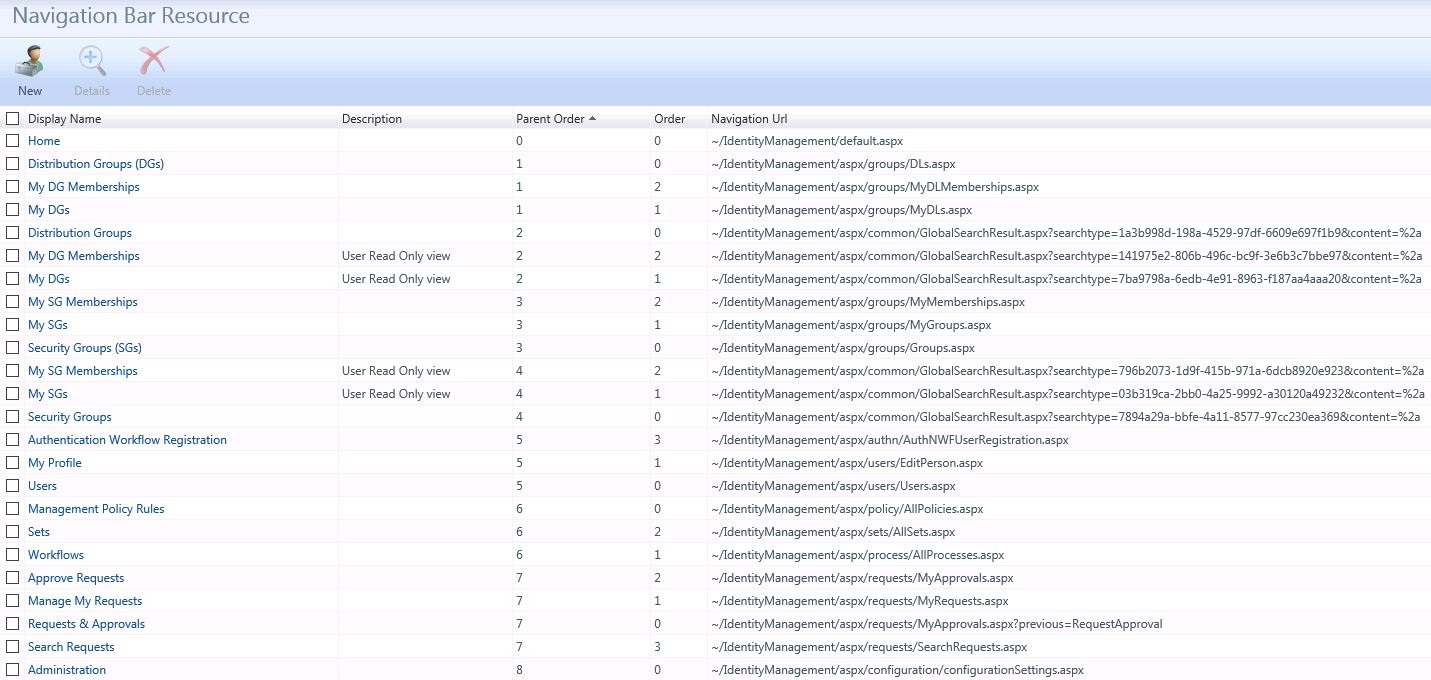

Now we need to make those URLs available via the portal UI. We need to do this for both the Navigation Bar and the Home Page, so create new resources, using the information in the originals as the basis. The only changes I made were to remove the reference to (DGs) and (SGs) from the top-level items, and to add the description “User Read Only view” to those new items that kept the same name (so that I could tell them apart):

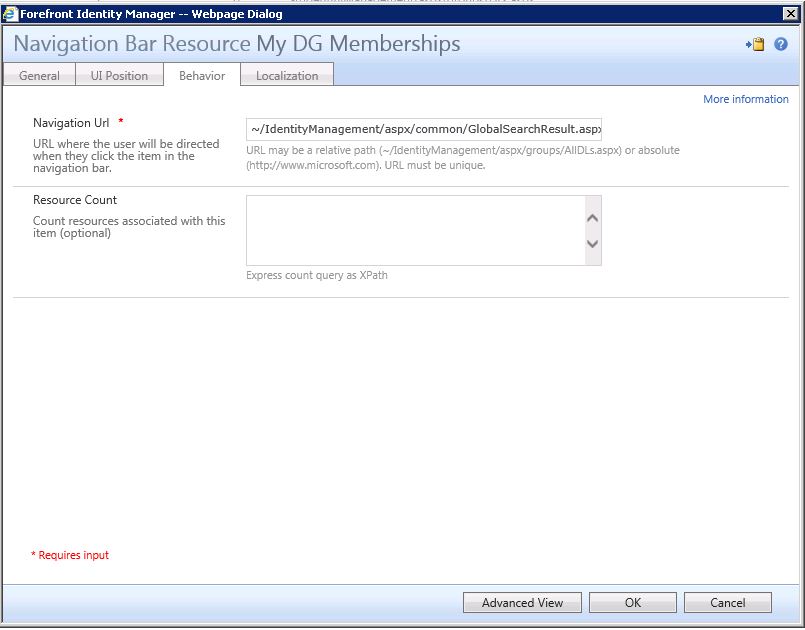

Use the URLs obtained from the search scopes to define the behaviour of each new Resource:

Do an IIS reset.

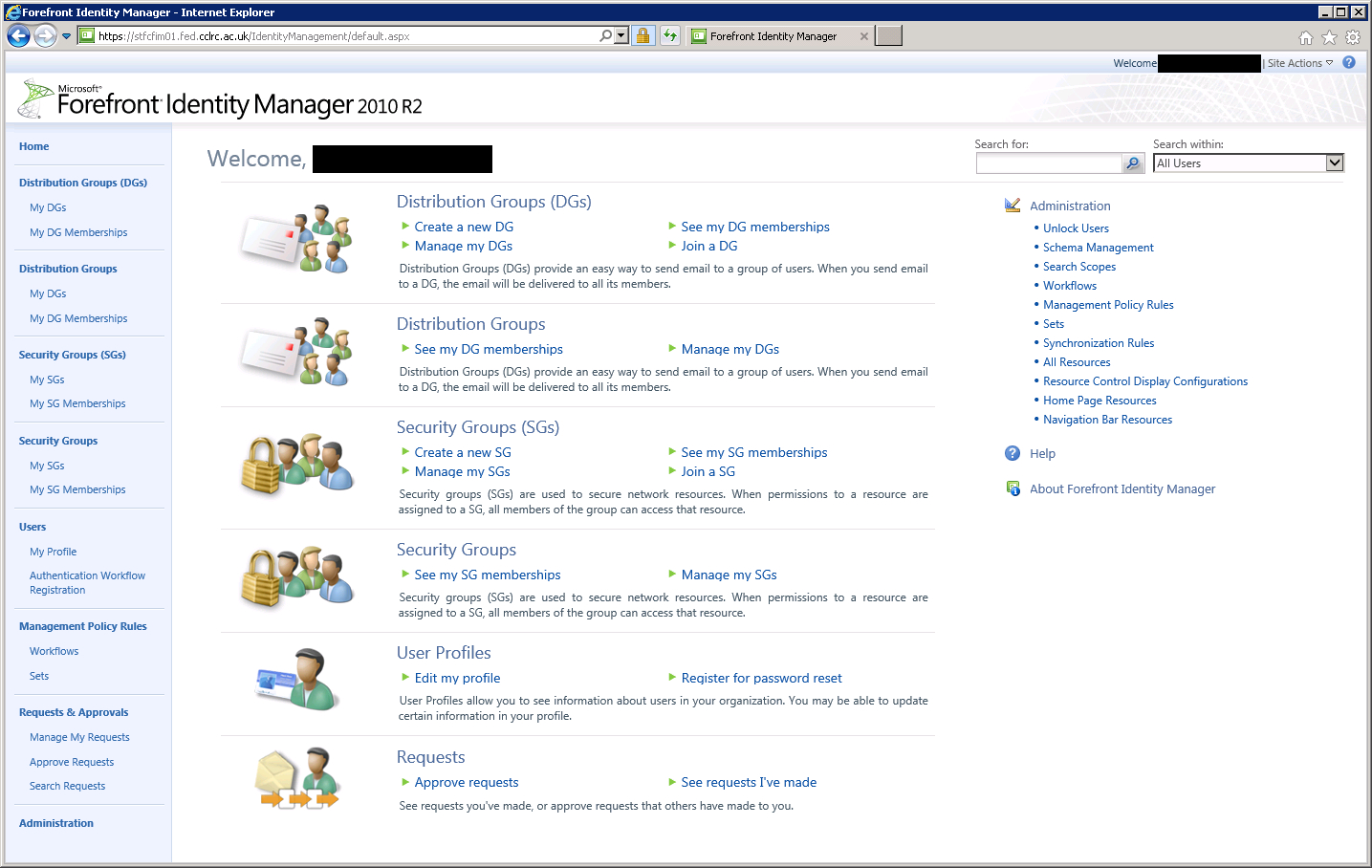
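The copy step for Navigation Bar and Home Page Resources can be sketched the same way. Again this is a hypothetical model rather than a FIM API: `PortalResource`, `read_only_copy` and the example URL are made up, and usage keywords are left out because the steps above don't spell them out for these resources.

```python
from dataclasses import dataclass, replace


# Hypothetical stand-in for a Navigation Bar / Home Page Resource.
@dataclass(frozen=True)
class PortalResource:
    display_name: str
    description: str
    parent_order: int
    order: int
    navigation_url: str


def read_only_copy(original: PortalResource, search_url: str) -> PortalResource:
    """Copy a resource and point it at a URL captured from a new search scope."""
    # Top-level items lose their "(DGs)" / "(SGs)" reference.
    name = original.display_name.replace(" (DGs)", "").replace(" (SGs)", "")
    description = original.description
    if name == original.display_name:
        # Same name as the original, so tag the copy to tell them apart.
        description = "User Read Only view"
    # Parent Order and Order are copied unchanged from the original.
    return replace(original, display_name=name, description=description,
                   navigation_url=search_url)


# Hypothetical values; use the URL copied from the search results page.
url = "https://fim.example.local/copied-search-url"
top = PortalResource("Distribution Groups (DGs)", "", 3, 0, "original-url")
child = PortalResource("All Distribution Groups", "", 3, 1, "original-url")
print(read_only_copy(top, url).display_name)   # Distribution Groups
print(read_only_copy(child, url).description)  # User Read Only view
```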

If you copied the Parent Order and Order values of the original Resources, the result is a little messy from the administrator's point of view, as all of those items are crammed together. Unfortunately, I know of no way to hide an item from the admin, so I just rearranged the layout order. After rearranging both the Nav Bar and Home Page Resource orders, it displays as follows:

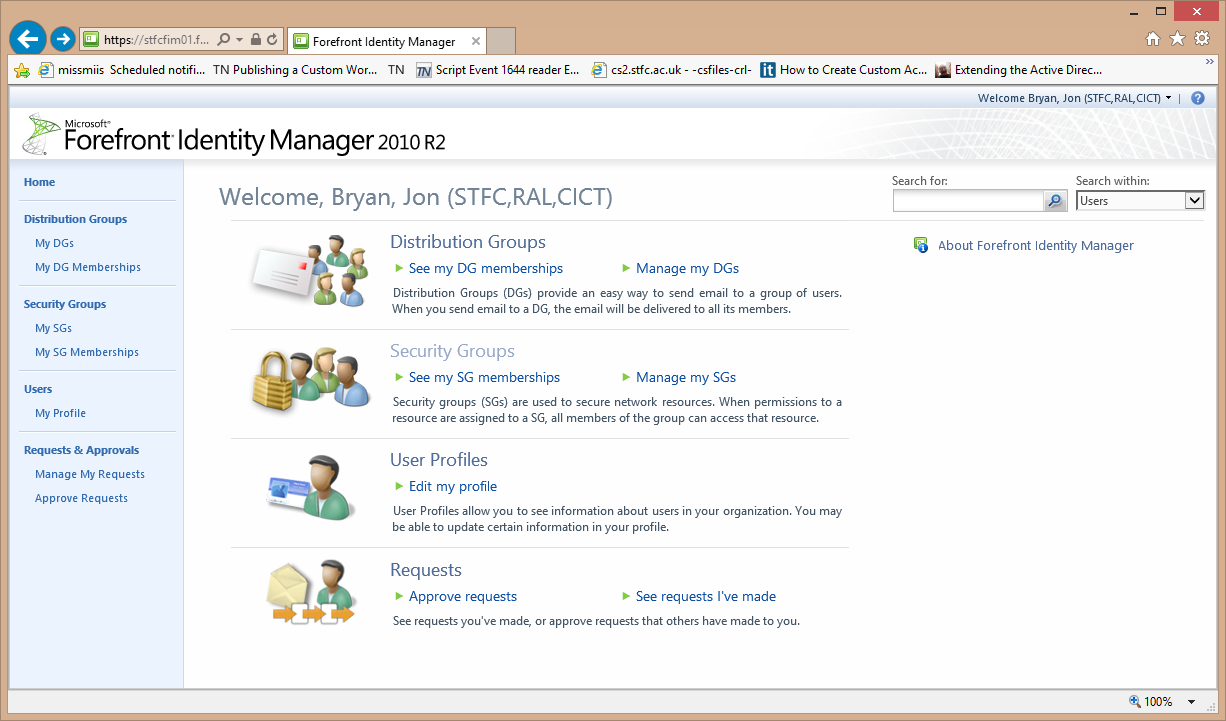
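One possible way to untangle the crammed layout is to give the read-only copies their own Parent Order values after all of the originals. This is a sketch under assumptions, not necessarily the arrangement in the screenshot above: it assumes the portal sorts items by Parent Order and then Order, and `NavItem`, the helpers and the example values are hypothetical.

```python
from typing import NamedTuple


class NavItem(NamedTuple):
    name: str
    parent_order: int
    order: int
    read_only: bool  # True for the new "User Read Only view" copies


def layout(items: list[NavItem]) -> list[str]:
    """Display order, assuming the portal sorts by Parent Order, then Order."""
    return [i.name for i in sorted(items, key=lambda i: (i.parent_order, i.order))]


def move_copies_after_originals(items: list[NavItem]) -> list[NavItem]:
    """Give each group of read-only copies a Parent Order past every original."""
    highest = max((i.parent_order for i in items if not i.read_only), default=0)
    copy_groups = sorted({i.parent_order for i in items if i.read_only})
    remap = {old: highest + 1 + n for n, old in enumerate(copy_groups)}
    return [i._replace(parent_order=remap[i.parent_order]) if i.read_only else i
            for i in items]


# Hypothetical values: copies made with the originals' Parent Order and Order.
items = [
    NavItem("Distribution Groups (DGs)", 3, 0, False),
    NavItem("Distribution Groups", 3, 0, True),
    NavItem("Security Groups (SGs)", 4, 0, False),
    NavItem("Security Groups", 4, 0, True),
]
print(layout(items))
# ['Distribution Groups (DGs)', 'Distribution Groups', 'Security Groups (SGs)', 'Security Groups']
print(layout(move_copies_after_originals(items)))
# ['Distribution Groups (DGs)', 'Security Groups (SGs)', 'Distribution Groups', 'Security Groups']
```

Keeping each copy's Order value means child items stay in the same relative order under their new parent.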

A normal user looking at the portal sees the following; the links go to the URLs captured from the new search scopes earlier:

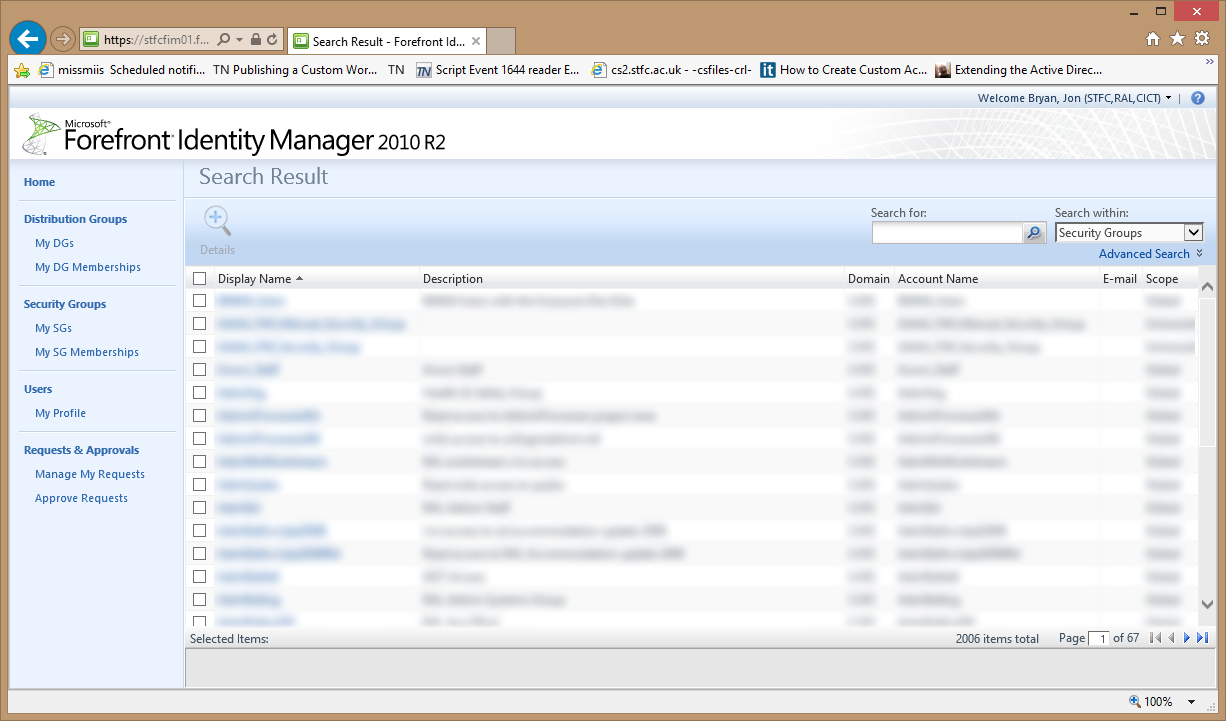

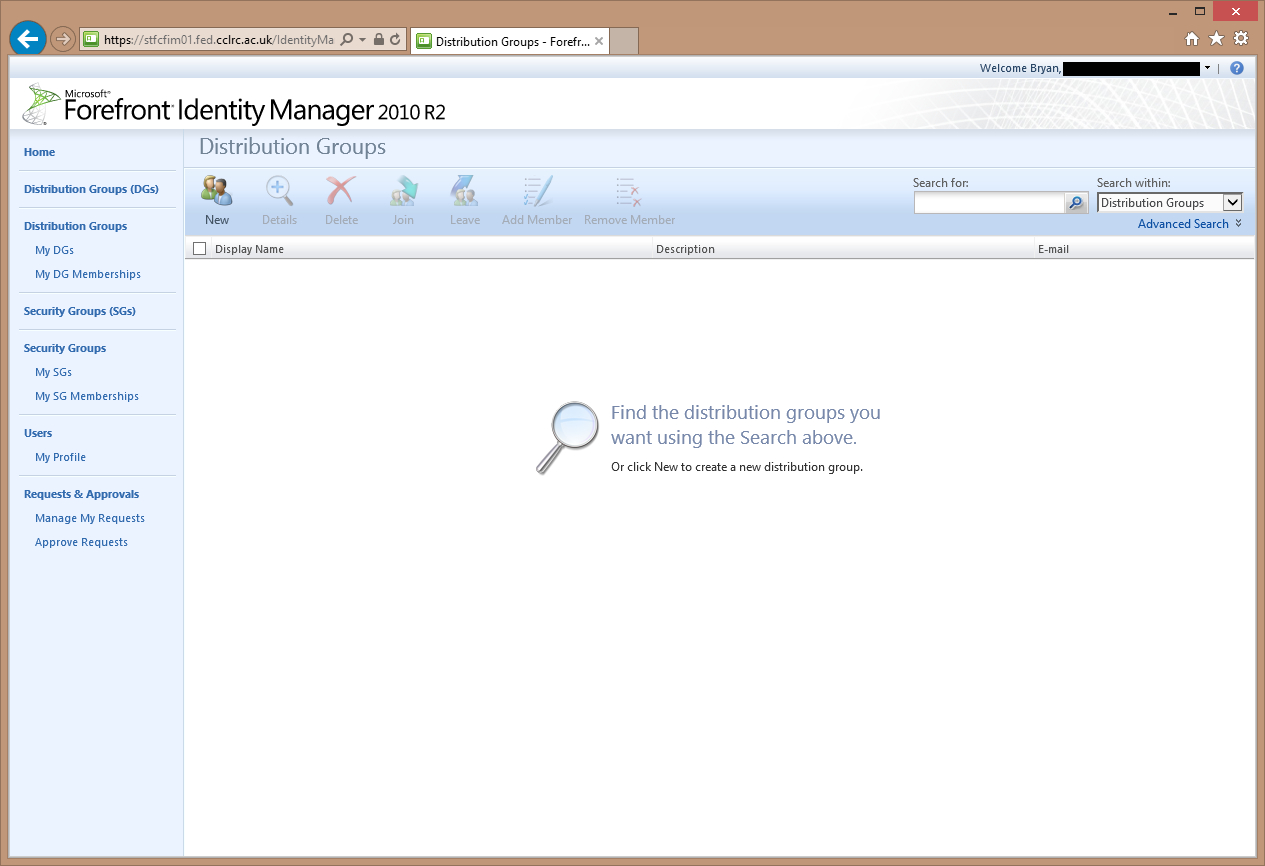

And when looking at, for example, “Distribution Groups”, they see only the Details button:

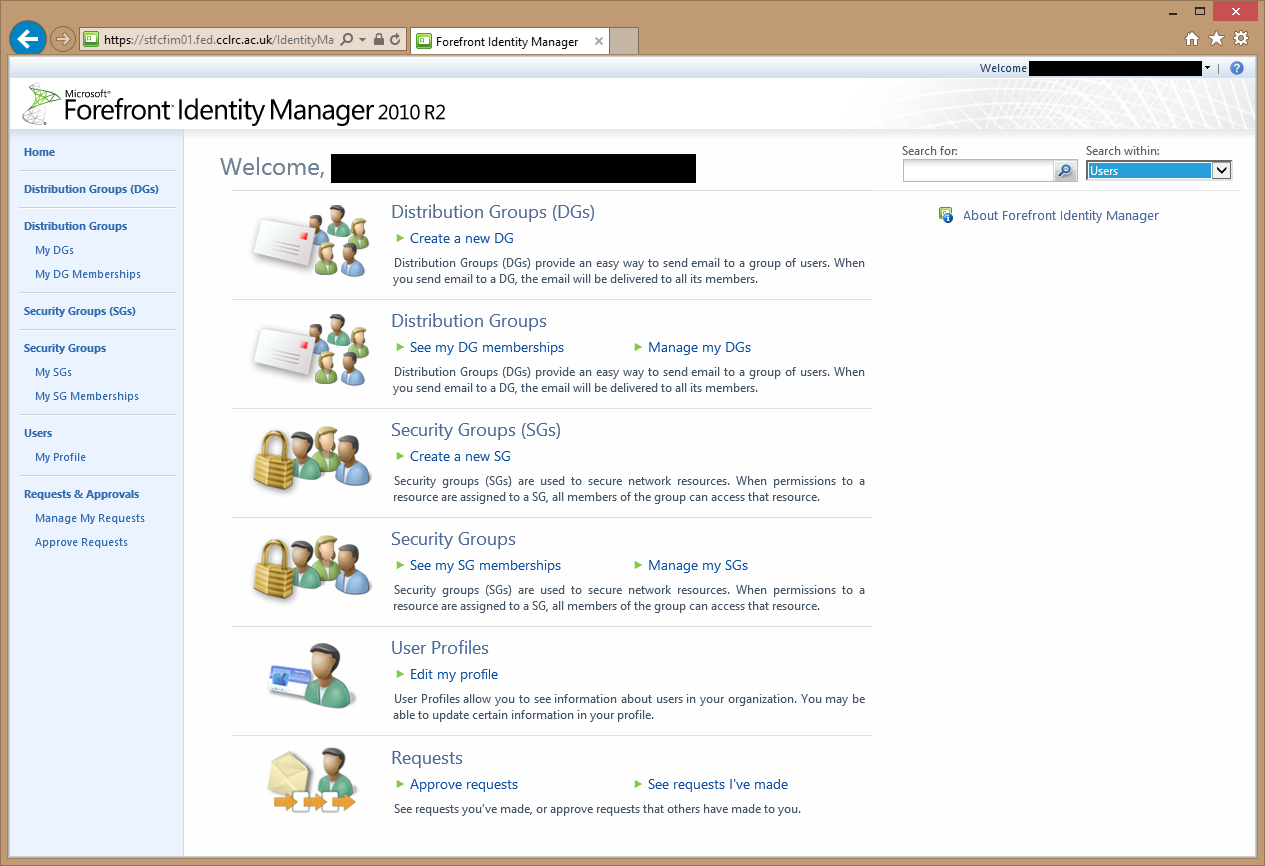
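Why do normal users see only the copies while administrators see everything crammed together? Here is a simplified, hypothetical model of the keyword side of this. It assumes every portal user holds BasicUI, helpdesk staff additionally hold PrivilegedUsers, and a user sees a UI resource when they hold at least one of its keywords. As far as I know, FIM actually enforces this through Sets and MPRs, not through a function like `can_see`.

```python
# Simplified, hypothetical model of usage-keyword visibility; not a FIM API.
def can_see(user_keywords: set[str], resource_keywords: set[str]) -> bool:
    """A user sees a UI resource if they hold at least one of its keywords."""
    return bool(user_keywords & resource_keywords)


# Assumption: every portal user holds BasicUI; helpdesk staff also hold PrivilegedUsers.
NORMAL_USER = {"BasicUI"}
PRIVILEGED_USER = {"BasicUI", "PrivilegedUsers"}

# Keywords on the search scopes after the change described earlier.
ORIGINAL_SCOPE = {"PrivilegedUsers"}
READ_ONLY_COPY = {"BasicUI", "GlobalSearchResult"}

for label, user in (("normal", NORMAL_USER), ("privileged", PRIVILEGED_USER)):
    print(label, can_see(user, ORIGINAL_SCOPE), can_see(user, READ_ONLY_COPY))
# normal False True
# privileged True True
```

The second line of output is the crammed administrator view: privileged users match both the original and the read-only copy.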

The PrivilegedUsers view looks like this:

And if a member of PrivilegedUsers uses the Distribution Groups (DGs) link, they see this; note that all of the buttons are available:

If I remember something I've missed, or find something that isn't quite right, I'll come back and correct it.